Section: New Results

Teleoperation

Shared Control for Remote Manipulation

Participants : Firas Abi Farraj, Paolo Robuffo Giordano.

This work concerns our activities in the context of the RoMaNS H2020 project (see Section 9.3.1.3). Our main goal is to allow a human operator to interface intuitively with a two-arm system: one arm carries a gripper (for grasping an object), and the other carries a camera that looks at the scene (gripper + object) and provides the needed visual feedback. The operator should be able to control the two-arm system easily in order to let the gripper approach the target object, and she/he should also receive force cues informing her/him of how feasible her/his commands are w.r.t. the constraints of the system (e.g., joint limits, singularities, limited camera field of view, and so on).
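One common way to compute such feasibility cues, not necessarily the one used in this work, is to penalize joint-limit proximity with a quadratic cost and render its negative gradient as a force on the operator's command directions. The sketch below assumes a hypothetical matrix `M` that maps the operator command `u` to joint velocities (q̇ = M u); the cost form and the helper names `joint_limit_gradient` and `force_cue` are illustrative assumptions.

```python
"""Hedged sketch: a force cue that resists commands driving joints to their limits.

Assumptions (not from the source): joint-limit proximity is scored by
H(q) = 1/2 * sum(((q_i - mid_i) / range_i)^2), and the operator command u
maps to joint velocities through a hypothetical matrix M (qdot = M u).
"""
from typing import List, Sequence

Matrix = List[List[float]]  # n_joints rows x n_commands columns


def joint_limit_gradient(q: Sequence[float], q_min: Sequence[float],
                         q_max: Sequence[float]) -> List[float]:
    """Gradient of H(q): zero at mid-range, growing toward either limit."""
    grad = []
    for qi, lo, hi in zip(q, q_min, q_max):
        mid = 0.5 * (lo + hi)
        rng = hi - lo
        grad.append((qi - mid) / rng ** 2)
    return grad


def force_cue(q: Sequence[float], q_min: Sequence[float], q_max: Sequence[float],
              M: Matrix, gain: float = 1.0) -> List[float]:
    """Force f = -gain * M^T grad H along each operator command direction.

    A command whose induced joint motion increases H is opposed by the cue.
    """
    g = joint_limit_gradient(q, q_min, q_max)
    n_cmd = len(M[0])
    return [-gain * sum(M[i][j] * g[i] for i in range(len(g)))
            for j in range(n_cmd)]
```

For example, with two joints limited to [-1, 1], the first joint at 0.9, and `M` the identity, the cue along the first command direction is negative (it resists further motion toward the upper limit), while the cue along the second is zero.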

We started working on this topic by proposing a shared control architecture in which the operator could provide instantaneous velocity commands along four suitable task-space directions that do not interfere with the main task of keeping the gripper aligned towards the target object (this main task was regulated automatically). The operator also received force cues informing her/him of how much her/his commands conflicted with the system constraints, in our case the joint limits of both manipulators. Finally, the camera moved continuously so as to keep both the gripper and the target object at two fixed locations on the image plane. Recently, we have extended this framework in several directions:

- in a first extension, the existing instantaneous interface has been improved towards an “integral” approach in which the user can command parts of the future manipulator trajectory, while the autonomy ensures that no constraint is violated (here we again considered joint limits and singularities, as well as a more realistic vision constraint that keeps the gripper and the object always visible and not overlapping). This shared control algorithm was validated in simulation in [58]. We are currently completing a full implementation on our dual-arm system (the two Viper robots);

- second, we have studied how to integrate learning from demonstration into our framework. We first used learning techniques to extract statistical regularities from “expert users” executing successful gripper trajectories towards the target object. These learned trajectories were then used to generate force cues that guide novice users during their teleoperation task, as if led by the “hands” of the expert users who demonstrated the trajectories in the first place [37];

- third, we have considered a grasping scenario in which a post-grasp task is specified (e.g., the grasped object needs to follow a predefined trajectory): in this scenario, the operator (supported by the robot autonomy) needs to decide where best to grasp in order to then execute the desired post-grasp action. However, different grasp poses make the post-grasp execution easier or harder for the robot because of the system constraints (e.g., joint limits and singularities). Since it is hard for an operator to be aware of these constraints, in this case the autonomy component provides force feedback that cues the operator toward the best grasp pose w.r.t. the existing constraints and the post-grasp task. The operator still has control over where to grasp, but she/he is guided by the force feedback toward more feasible grasp poses than she/he could have found without any feedback [48];

- finally, we have considered the task of assisting an operator controlling a UAV that maps a remote environment with an onboard camera. In this scenario the operator can control the UAV motion during the mapping task. However, as in any estimation problem, some motions are more favorable than others for the scene estimation task: therefore, force feedback is produced to assist the operator in selecting a UAV motion (in particular, its linear velocity) that is also optimal for the scene estimation process. The results have been validated with numerical simulations in a realistic environment [39].
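The learning-from-demonstration idea in the second extension can be illustrated with a minimal sketch. It is a common simplification, not the method of [37]: it assumes the expert demonstrations are already time-aligned (same number of samples), summarizes them by a per-step mean and variance, and renders a spring-like cue toward the mean whose stiffness grows where the experts agreed. The names `learn_model`, `guidance_force`, `k`, and `eps` are hypothetical.

```python
"""Hedged sketch of demonstration-based guidance cues (illustrative only).

Assumptions: demonstrations are time-aligned, and the cue is a spring toward
the per-step expert mean, stiffer where expert variance is low.
"""
from statistics import mean, pvariance
from typing import List, Sequence, Tuple

Trajectory = Sequence[Sequence[float]]  # time steps x spatial dimensions


def learn_model(demos: Sequence[Trajectory]) -> Tuple[List[List[float]], List[List[float]]]:
    """Per-time-step mean and variance of the expert demonstrations."""
    n_steps, n_dims = len(demos[0]), len(demos[0][0])
    means, variances = [], []
    for t in range(n_steps):
        # samples[k] holds coordinate k of every demonstration at step t
        samples = [[demo[t][k] for demo in demos] for k in range(n_dims)]
        means.append([mean(s) for s in samples])
        variances.append([pvariance(s) for s in samples])
    return means, variances


def guidance_force(x: Sequence[float], t: int, means, variances,
                   k: float = 1.0, eps: float = 1e-3) -> List[float]:
    """Force pulling the gripper position x toward the expert mean at step t.

    Dividing by (variance + eps) makes the cue stiff where demonstrations were
    consistent and compliant where experts differed; eps avoids division by 0.
    """
    return [-k * (xi - mu) / (var + eps)
            for xi, mu, var in zip(x, means[t], variances[t])]
```

With two demonstrations that coincide at the first step and diverge at the second, the same unit deviation from the mean produces a much stronger cue at the first step, so novices are held tightly only where the experts were consistent.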

Wearable haptics

Participants : Marco Aggravi, Claudio Pacchierotti.

Kinesthetic haptic feedback is used in robotic teleoperation to provide the human operator with force information about the status of the slave robots and their interaction with the remote environment. Although kinesthetic feedback has been shown to enhance the performance of teleoperation systems, it still has several limitations, including its negative effect on the safety and stability of such systems, as well as the limited workspace, limited available DoF, high cost, and complexity of kinesthetic interfaces. In this respect, wearable haptics is gaining great attention. Safe, compact, unobtrusive, inexpensive, easy-to-wear, and lightweight haptic devices enable researchers to provide compelling touch sensations to multiple parts of the body, significantly increasing the applicability of haptics in many fields, such as robotics, rehabilitation, gaming, and immersive systems.

Accordingly, our objective has been to study, design, and evaluate novel wearable haptic interfaces for controlling remote robotic systems as well as for interacting with immersive virtual environments.

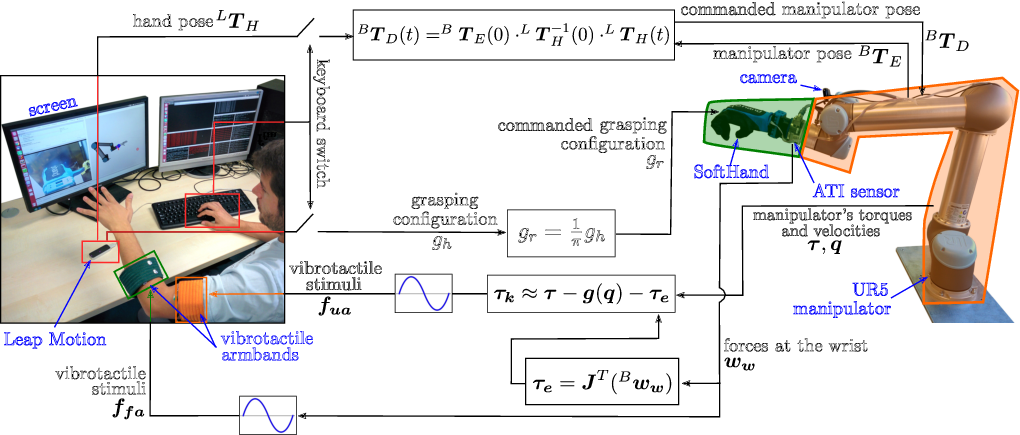

We started by working on a multi-point wearable feedback solution for robotic manipulators operating in a cluttered environment [40]. The slave system is composed of an anthropomorphic soft robotic hand attached to a 6-axis force-torque sensor, which is in turn fixed to a 6-DoF robotic arm. The master system is composed of a Leap Motion controller and two wearable vibrotactile armbands, worn on the forearm and upper arm. The Leap Motion tracks the user's hand pose to control the pose of the manipulator and the grasping configuration of the robotic hand. The armband on the forearm conveys information about collisions of the slave hand/wrist system (green patch to green armband, see Fig. 8), whereas the armband on the upper arm conveys information about collisions of the slave arm (orange patch to orange armband). The amplitude of the vibrotactile feedback relayed by the armbands is proportional to the interaction force of the collision. A camera mounted near the manipulator's end-effector enables the operator to see the environment in front of the robotic hand. To validate our system, we carried out a human-subject telemanipulation experiment in a cluttered scenario. Twelve participants were asked to control the motion of the robotic manipulator to grasp an object hidden among debris of various shapes and stiffnesses. Haptic feedback provided by our wearable devices significantly improved the performance of the considered telemanipulation tasks. Finally, all subjects but one preferred the conditions with wearable haptic feedback.
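The collision-to-armband mapping described above can be sketched as follows. This is an illustrative reading, not the actual implementation: the link labels in `HAND_WRIST_LINKS`, the saturation force `F_MAX`, and the choice of taking the strongest contact per armband are assumptions; only the routing (hand/wrist to forearm, rest of the arm to upper arm) and the force-proportional amplitude come from the text.

```python
"""Hedged sketch: routing slave collisions to the two vibrotactile armbands."""
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Contact:
    link: str     # slave link in collision (hypothetical labels)
    force: float  # magnitude of the measured interaction force [N]


# Assumption: which slave links count as the "hand/wrist system".
HAND_WRIST_LINKS = {"hand", "wrist"}
# Assumption: force [N] at which the vibration amplitude saturates.
F_MAX = 10.0


def armband_amplitudes(contacts: Iterable[Contact]) -> Dict[str, float]:
    """Normalized vibration amplitude (0..1) for each armband."""
    amp = {"forearm": 0.0, "upper_arm": 0.0}
    for c in contacts:
        # Hand/wrist collisions go to the forearm band, arm collisions to the upper-arm band.
        band = "forearm" if c.link in HAND_WRIST_LINKS else "upper_arm"
        level = min(c.force / F_MAX, 1.0)  # proportional to force, then saturated
        amp[band] = max(amp[band], level)  # assumption: strongest contact wins
    return amp
```

For instance, a 2 N contact on the wrist and a 20 N contact on an arm link yield a forearm amplitude of 0.2 and a saturated upper-arm amplitude of 1.0.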


We have also used wearable haptics for guidance [20]. In this context, haptic feedback is not used to convey a force exerted by the slave robot in the remote environment; instead, it provides guidance cues about a predetermined trajectory to follow. To this end, we developed a novel wearable device for the forearm. Four cylindrical rotating end effectors, located on the user's forearm, can generate skin stretch at the ulnar, radial, palmar, and dorsal sides of the arm. When all the end effectors rotate in the same direction, the cutaneous device is able to provide cues about a desired pronation/supination of the forearm. On the other hand, when two opposite end effectors rotate in opposite directions, the device is able to provide cutaneous cues about a desired translation of the forearm. By combining these two stimuli, we can provide both rotation and translation guidance. To evaluate the effectiveness of our device in providing navigation information, we carried out two haptic navigation experiments. In the first one, subjects were asked to translate and rotate the forearm toward a target position and orientation, respectively. In the second experiment, subjects were asked to control a 6-DoF robotic manipulator to grasp and lift a target object. Haptic feedback provided by our wearable device improved performance in both experiments compared with providing no haptic feedback. Moreover, it achieved performance similar to that of sensory substitution via visual feedback, without overloading the visual channel.
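The way the two stimuli combine can be sketched as a simple superposition: a common term shared by all four end effectors encodes rotation, and a differential term on each opposite pair encodes translation. This is a hedged illustration, not the device's controller; the shared sign convention and the assignment of each pair to a translation axis (`t_pd`, `t_ru`) are assumptions.

```python
"""Hedged sketch: superimposing rotation and translation skin-stretch cues."""
from typing import Dict, Tuple


def wheel_speeds(rotation: float, t_pd: float, t_ru: float) -> Dict[str, float]:
    """Rotation speed of each end effector (one shared sign convention, assumed).

    rotation: common term, all end effectors turn the same way (pronation/supination cue)
    t_pd: differential term on the ulnar/radial pair (translation cue, assumed palmar-dorsal axis)
    t_ru: differential term on the palmar/dorsal pair (translation cue, assumed radial-ulnar axis)
    """
    return {
        "ulnar": rotation + t_pd,
        "radial": rotation - t_pd,  # opposite pair turns in opposite directions
        "palmar": rotation + t_ru,
        "dorsal": rotation - t_ru,
    }


def decode(speeds: Dict[str, float]) -> Tuple[float, float, float]:
    """Recover (rotation, t_pd, t_ru) from the four speeds, showing the cues separate."""
    rotation = sum(speeds.values()) / 4
    t_pd = (speeds["ulnar"] - speeds["radial"]) / 2
    t_ru = (speeds["palmar"] - speeds["dorsal"]) / 2
    return rotation, t_pd, t_ru
```

A pure rotation cue gives four equal speeds, a pure translation cue drives one opposite pair in opposite directions, and because the mapping is linear and invertible both cues can be rendered at the same time.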

Finally, we also used wearable haptics for immersive virtual and augmented reality experiences, mainly addressing tasks related to entertainment and industrial training. In these cases, we used wearable fingertip devices able to provide pressure and skin stretch sensations [24]. This article was also featured in the News section of Science Magazine.

We also presented a review paper on the topic of wearable haptic devices for the hand [29].